Analysis of mainstream gesture recognition technologies for improving the VR/AR device experience

Improving the experience of virtual reality devices depends heavily on processor and display technology, but how users interact with the device matters just as much. In both VR and AR devices, voice, body, and gesture recognition can serve as interaction methods that enhance the experience. This article introduces the mainstream optical gesture recognition technologies.

Gesture recognition technology can be roughly divided into three levels, running from simple to complex and from coarse to fine: two-dimensional hand recognition, two-dimensional gesture recognition, and three-dimensional gesture recognition.

Before discussing gesture recognition in detail, we need to understand the difference between 2D and 3D. Two-dimensional space is just a plane: the position of an object in it can be represented by a coordinate pair (X, Y), like a picture hanging on a wall. 3D adds a "depth" coordinate (Z) that 2D lacks. "Depth" here does not mean depth in the everyday sense, such as how deep a well is; it is better understood as distance from the eye. Think of a goldfish in a fish tank: it can swim up and down in front of you, and it can also swim farther from or closer to you.

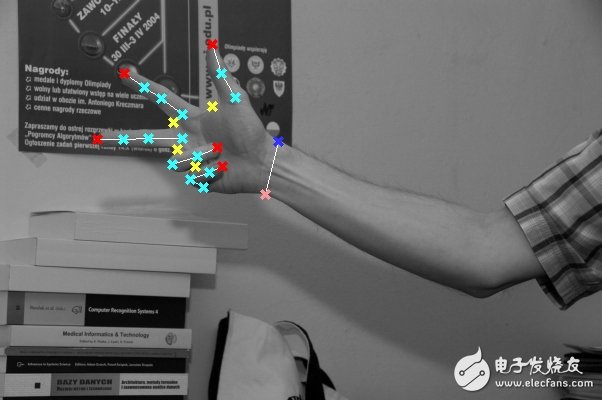

The first two levels work entirely in two dimensions: they take two-dimensional information as input and need no depth information. An ordinary photo contains exactly this kind of two-dimensional information, so a two-dimensional image captured by a single camera is enough as input. Computer vision techniques then analyze that image to extract information and thereby recognize gestures.

The third level, 3D gesture recognition, works in three dimensions. The most fundamental difference from 2D gesture recognition is that 3D gesture recognition requires depth information as input, which makes it considerably more complex in both hardware and software. For simple operations, such as pausing or resuming a video, a two-dimensional gesture is sufficient. More complex human-computer interaction, such as interacting with 3D scenes, requires depth information.

Two-dimensional hand recognition

Two-dimensional hand recognition, also known as static two-dimensional gesture recognition, recognizes the simplest type of gesture. After acquiring two-dimensional information, it can identify a few static hand shapes, such as a closed fist or an open hand with all five fingers spread. A representative company is Flutter, which Google acquired a year ago. With Flutter's software installed, the user can control a media player with a few hand shapes: holding an open palm up in front of the camera starts the video, and doing the same again pauses it.

"Static" is the key feature of this technology: it recognizes only the "state" of a gesture and does not perceive how the gesture changes over time. Used in rock-paper-scissors, for example, it can recognize the rock, paper, and scissors hand shapes, but it knows nothing about any other gesture. It is therefore essentially a pattern-matching technique: a computer vision algorithm analyzes the image and compares it with preset image patterns to work out what the gesture means.

The shortcomings of this technology are obvious: it can recognize only preset states, scales poorly, offers a weak sense of control, and supports only the most basic human-computer interaction. Still, it is the first step toward recognizing complex gestures, and being able to interact with a computer through gestures at all is pretty cool. Imagine you are busy eating: you just make a gesture in the air and the computer switches to the next video, which is much more convenient than reaching for the mouse!
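The pattern matching described for static hand recognition can be illustrated with a minimal Python sketch. It assumes an earlier step (not shown) has already segmented the hand into a binary mask the same size as each preset template. The function names, the toy 5x5 templates, and the 0.5 threshold are hypothetical, and intersection-over-union is just one simple similarity measure, not necessarily what Flutter or any other product uses.

```python
import numpy as np


def iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection-over-union of two boolean masks of the same shape."""
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum()) / float(union)


def classify_static_gesture(mask, templates, min_score=0.5):
    """Return the preset gesture whose template best matches the mask, or None."""
    best_name, best_score = None, 0.0
    for name, template in templates.items():
        score = iou(mask, template)
        if score > best_score:
            best_name, best_score = name, score
    # Anything that matches no preset well enough is simply "unknown".
    return best_name if best_score >= min_score else None


# Toy 5x5 templates (hypothetical): a compact fist and an open palm with fingers.
FIST = np.zeros((5, 5), dtype=bool)
FIST[1:4, 1:4] = True
PALM = np.zeros((5, 5), dtype=bool)
PALM[2:5, :] = True    # the palm itself
PALM[0:2, ::2] = True  # three extended fingers

TEMPLATES = {"fist": FIST, "open_palm": PALM}

observed = FIST.copy()
observed[0, 0] = True  # one noisy pixel from segmentation
print(classify_static_gesture(observed, TEMPLATES))  # fist
```

The `min_score` cutoff is what makes this kind of recognizer "know nothing" about gestures outside its preset list: an unfamiliar hand shape simply comes back as `None`.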

Two-dimensional gesture recognition

Two-dimensional gesture recognition is somewhat more sophisticated than two-dimensional hand recognition, but it still contains essentially no depth information and stays on the two-dimensional level. Besides recognizing hand shapes, it can recognize simple two-dimensional gestures, such as waving at the camera. Representative companies include PointGrab, EyeSight, and ExtremeReality, all from Israel.

Two-dimensional gesture recognition is dynamic: it can track the movement of the hand and recognize compound actions that combine hand shapes with hand motion. This genuinely extends gesture recognition across the whole two-dimensional plane. Beyond controlling play/pause, gestures can now drive more complex operations that need changes in two-dimensional coordinates, such as moving forward/back, paging up, and scrolling down.
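A minimal sketch of the dynamic step: assuming the hand's centroid has already been tracked across frames (in image pixel coordinates, with y growing downward), the overall displacement can be classified as a swipe direction. The function name and the 50-pixel threshold are hypothetical; real systems use far more robust trajectory models.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downward


def classify_swipe(track: Sequence[Point], min_distance_px: float = 50.0) -> Optional[str]:
    """Classify a tracked hand-centroid trajectory as a swipe direction."""
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    if max(abs(dx), abs(dy)) < min_distance_px:
        return None  # the hand barely moved: a static pose, not a swipe
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # image y axis points down


# Hand centroid over three frames, drifting to the right.
print(classify_swipe([(100, 200), (160, 205), (240, 210)]))  # right
```

Each direction could then be bound to a player action, for example a right swipe to move forward; that mapping is up to the application.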

Although this technology has the same hardware requirements as two-dimensional hand recognition, more advanced computer vision algorithms give it richer human-computer interaction. The user experience also improves a notch, moving from pure state control to fairly rich control across the plane. The technology has already been integrated into TVs, but it has not yet become a common control method.

3D gesture recognition

Next comes the highlight of the gesture recognition field today: 3D gesture recognition. It takes input that contains depth information and can recognize a wide range of hand shapes, gestures, and motions. Unlike the two 2D techniques above, 3D gesture recognition cannot rely on a single ordinary camera, because a single ordinary camera cannot provide depth information. Obtaining depth information requires special hardware, and there are currently three main hardware approaches; combined with new, advanced computer vision algorithms, each can deliver three-dimensional gesture recognition. Below we introduce the 3D imaging principles behind these three approaches.

1. Structured Light

The representative application of structured light is the first-generation Kinect, which uses PrimeSense's technology.

The basic principle is to fit a laser projector with a grating that carries a specific pattern. Light passing through the grating is diffracted into that pattern, which lands on the surface of the object. Because the camera views the scene from a position offset from the projector, the pattern appears displaced by an amount that depends on how far away the surface is. The camera captures the pattern projected onto the object, and from the displacement of the pattern an algorithm computes the position and depth of the object, reconstructing the whole three-dimensional space.
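The depth computation can be sketched with a simplified triangulation model. The sketch assumes a rectified projector-camera pair, a pinhole camera, and a reference image of the pattern recorded on a flat plane at a known depth Z0. Under those assumptions, a pattern feature shifted by d pixels from its reference position lies at depth Z satisfying `1/Z = 1/Z0 + d/(f*b)`, where f is the focal length in pixels and b is the projector-camera baseline. The function name and the example numbers are illustrative, not Kinect specifications.

```python
def depth_from_pattern_shift(shift_px: float, focal_px: float,
                             baseline_m: float, ref_depth_m: float) -> float:
    """Estimate depth (m) from how far a projected pattern feature has shifted.

    Simplified model (assumption): rectified projector-camera pair, pinhole
    camera, and a reference pattern image recorded on a plane at ref_depth_m.
    A positive shift means the surface is closer than the reference plane.
    """
    inverse_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inverse_depth <= 0:
        raise ValueError("shift is too large and negative: no finite depth")
    return 1.0 / inverse_depth


# Illustrative numbers only: f = 580 px, b = 7.5 cm, reference plane at 2 m.
# Shifts of +21.75, 0 and -10.875 px map to roughly 1 m, 2 m and 4 m.
for shift in (21.75, 0.0, -10.875):
    print(shift, round(depth_from_pattern_shift(shift, 580.0, 0.075, 2.0), 3))
```

Because depth depends on the inverse of the shift, a one-pixel measurement error costs more depth accuracy at long range than at short range, which is one reason such sensors have a limited working range.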

With Kinect's structured light, the projected pattern cannot be measured reliably when the object is too close, so depth cannot be computed accurately at short range. About 1 to 4 meters is therefore the best working range.

2. Time of Flight

Time of flight is the technology used by SoftKinetic, which supplied Intel with a 3D camera for gesture recognition. The same hardware technology is also used in Microsoft's new-generation Kinect.

The basic principle is to fit a light-emitting element whose photons are reflected back when they hit the surface of an object. A special CMOS sensor captures these photons, emitted by the light source and reflected from the object's surface, and measures their flight time. From the flight time, the distance the photons traveled can be derived, which gives the depth of the object.
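The distance calculation itself is simple: light covers the path to the object and back, so distance = speed of light × round-trip time / 2. The Python sketch below shows this, plus a continuous-wave variant that infers the round-trip time from the phase shift of amplitude-modulated light, a scheme many ToF sensors use. Which scheme a particular product uses is not stated in this article, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Direct (pulsed) time of flight: light goes out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave variant: infer the round trip from the phase shift of
    amplitude-modulated light. Only unambiguous up to c / (2 * modulation_hz)."""
    round_trip_s = phase_rad / (2.0 * math.pi * modulation_hz)
    return distance_from_round_trip(round_trip_s)


# A round trip of about 6.67 ns corresponds to a surface about 1 m away.
print(distance_from_round_trip(6.67e-9))
```

The timescales show why a special sensor is needed: a 1 cm change in distance alters the round trip by only about 67 picoseconds.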

Computationally, time of flight is the simplest of the three 3D approaches: obtaining depth requires no computer vision computation.

3. Multi-angle Imaging (Multi-camera)

Representative products of multi-angle imaging include Leap Motion's product of the same name and uSens' Fingo.

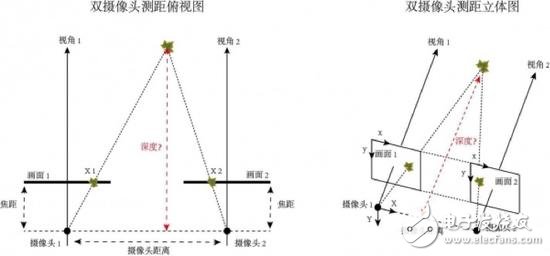

The basic principle is to capture images with two or more cameras at the same time, much as humans view the world with two eyes and insects with compound eyes. An algorithm compares the differences between the images these cameras capture at the same moment and computes depth information, producing a multi-angle 3D image.

Here we briefly explain the principle using two cameras:

Dual-camera ranging calculates depth from geometric principles. Two cameras photograph the scene, yielding two photos of the same environment from different viewpoints; this in effect simulates how human eyes work. Because the parameters of the two cameras and their relative position are known, once the position of the same object (the maple leaf) is found in both pictures, an algorithm can calculate the object's distance from the cameras, which is its depth.
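For an idealized, rectified camera pair (image rows aligned, parallel optical axes), the geometry reduces to one formula: if the same point appears at column x_left in the left image and x_right in the right image, its disparity is d = x_left - x_right and its depth is Z = f * B / d, where f is the focal length in pixels and B is the distance between the cameras. The sketch assumes that rectified setup; the function name and the numbers are illustrative.

```python
def stereo_depth(x_left_px: float, x_right_px: float,
                 focal_px: float, baseline_m: float) -> float:
    """Depth (m) of a point seen by a rectified stereo pair, via Z = f * B / d."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        # Zero disparity means the point is at infinity (or the match is wrong).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity


# Hypothetical example: the maple leaf is found at column 420 in the left image
# and column 400 in the right, with f = 700 px and cameras 6 cm apart,
# giving a depth of about 700 * 0.06 / 20 = 2.1 m.
print(stereo_depth(420.0, 400.0, 700.0, 0.06))
```

Note that nearer objects produce larger disparities, so the same one-pixel matching error matters less up close than far away.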

Multi-angle imaging has the lowest hardware requirements of the 3D gesture recognition technologies, but it is also the hardest to implement. It needs no additional special equipment and relies entirely on computer vision algorithms to match the same target across the images. Compared with structured light and time of flight, which suffer from high cost and high power consumption, it can deliver 3D gesture recognition that is "cheap and good."
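The hard part here is the matching step. As a minimal illustration, assuming rectified images so that a point's match lies on the same row, the sketch below uses sum-of-absolute-differences (SAD) block matching, one of the simplest stereo matching methods and not necessarily what Leap Motion or uSens use, to find the disparity of a single pixel. The function name, window size, and search range are hypothetical.

```python
import numpy as np


def match_disparity(left_row, right_row, x, half_window=3, max_disparity=64):
    """Disparity of pixel x in a rectified left scanline, found by SAD block matching.

    For a rectified pair the match lies on the same row of the right image,
    shifted left by the disparity, so only that 1-D range is searched.
    Both rows are assumed to have the same length.
    """
    left_row = np.asarray(left_row, dtype=float)
    right_row = np.asarray(right_row, dtype=float)
    if left_row.shape != right_row.shape:
        raise ValueError("rows must have the same length")
    lo, hi = x - half_window, x + half_window + 1
    if lo < 0 or hi > len(left_row):
        raise ValueError("matching window falls outside the row")
    patch = left_row[lo:hi]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # candidate window would leave the right image
        cost = float(np.abs(patch - right_row[lo - d:hi - d]).sum())
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Synthetic check: the right view sees the same texture shifted 5 px to the left.
rng = np.random.default_rng(0)
scene = rng.random(200)
left, right = scene[:100], scene[5:105]
print(match_disparity(left, right, x=50))  # 5 for this synthetic pair
```

A real system repeats this for every pixel or tracked hand feature, has to cope with textureless regions and occlusions where the lowest cost is ambiguous, and converts each disparity d to depth with Z = f * B / d. That matching burden is why multi-angle imaging, despite its cheap hardware, is the hardest of the three approaches to implement.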

This article draws on Wang Yuan's article "Analysis of mainstream optical gesture recognition technology applied to VR/AR".
